Iceotope director David Craig shines a light on the failings of traditional cooling technologies and highlights the methods best equipped to handle modern-day cooling requirements at the edge.

The challenges facing edge computing, AI and edge AI become pervasive once you start to deploy processing and storage capacity outside the data centre, into non-dedicated environments close to the point of use.

Dense heat loads from the GPUs that drive emerging applications, power availability and energy consumption, physical security and tamper risks, reliability and resilience in harsh IT environments, and maintenance outside the data centre are just some of the obstacles that enterprises will need to overcome to deliver real-time value at the edge.

It isn’t simply a case of taking what currently works well in the data centre, tweaking it and packaging it up as an edge computing solution. Pushing traditional technologies, such as air cooling, from the data centre to the edge might seem convenient in the short term, but it may not be effective in the medium term and could prove to be a big and expensive mistake in the long term.

The problem with traditional technologies

Traditional air cooling has served the industry well, but pushing it to the edge is non-trivial. Once you take energy-efficient IT out of the controlled data centre environment that provisions it, you lose not only control but also efficiency. What’s more, the once-enviable PUE (power usage effectiveness) you achieved in the data centre shoots through the roof, because the extra energy spent cooling IT in an uncontrolled environment is pure overhead on top of the IT load. To compound the problem, the lack of a three-phase power supply is already causing concern at the edge.
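PUE is conventionally defined as total facility power divided by the power drawn by the IT equipment itself, so 1.0 is the ideal and every watt of cooling overhead pushes it higher. The sketch below illustrates that effect; the `pue` helper and all of the kilowatt figures are hypothetical, chosen only for illustration, and are not measurements from any real site.

```python
def pue(total_facility_kw: float, it_load_kw: float) -> float:
    """Power usage effectiveness: total facility power divided by IT power."""
    if it_load_kw <= 0:
        raise ValueError("it_load_kw must be positive")
    return total_facility_kw / it_load_kw


# Hypothetical figures for illustration only: the same 5 kW IT load,
# first with modest non-IT overhead (e.g. cooling), then with heavy overhead.
it_kw = 5.0
controlled = pue(it_kw + 1.5, it_kw)    # 1.5 kW of overhead
uncontrolled = pue(it_kw + 4.0, it_kw)  # 4.0 kW of overhead

print(f"Controlled data centre: PUE = {controlled:.2f}")
print(f"Uncontrolled edge site: PUE = {uncontrolled:.2f}")
```

The IT load is identical in both cases; only the overhead changes, which is why cooling inefficiency shows up directly in the PUE figure.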

Fans and other moving parts are critical to cooling electronics with air, but they are also failure points that need regular servicing and maintenance. The in-chassis vents and holes that let airflow carry heat away also expose the IT load to environmental contaminants, and to people. People will be people: if an error can be made, it almost certainly will be. So you now have a security, resilience, energy and cooling problem at the edge.

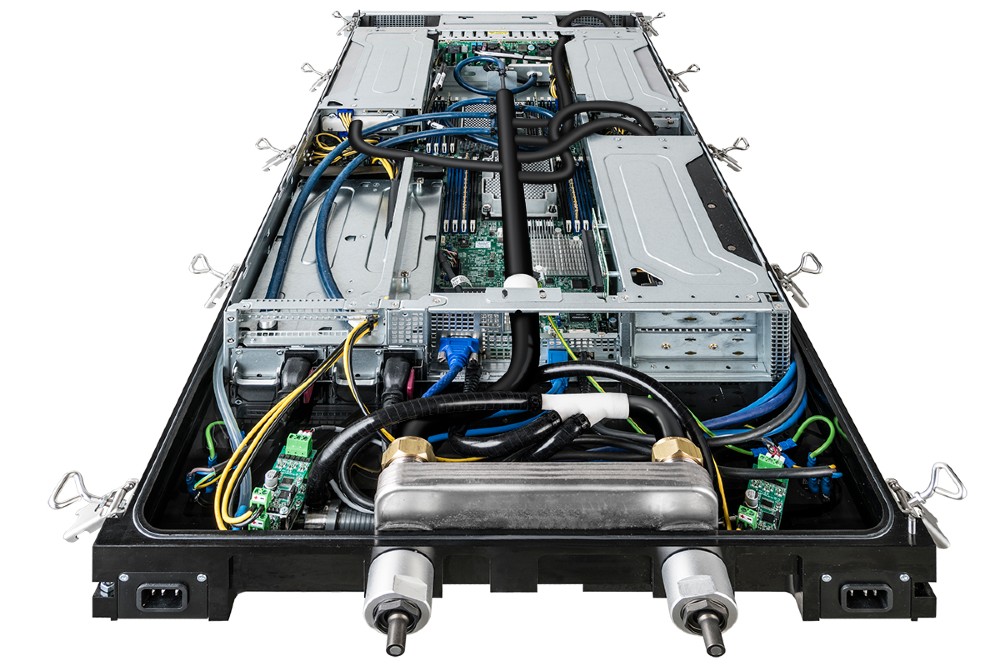

Warm-water cold plates and similar devices can partially solve the problem of increasing chip densities. However, they still require air cooling to deal with the heat they do not recover, which can be as much as 40% of the total. The hotter, denser chips now on the horizon will impair the effectiveness of these cooling techniques even further.
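The arithmetic behind the residual air load is simple, and it shows why rising chip density matters: if cold plates capture a fixed fraction of the heat, the remainder that air must still remove grows in step with the rack load. The sketch below assumes a constant 60% liquid capture (leaving the 40% mentioned above for air); the `residual_air_load_kw` helper and the rack loads are hypothetical illustrations, not vendor data.

```python
def residual_air_load_kw(it_load_kw: float, liquid_capture_fraction: float) -> float:
    """Heat (kW) left for air cooling after cold plates remove their share."""
    if not 0.0 <= liquid_capture_fraction <= 1.0:
        raise ValueError("liquid_capture_fraction must be between 0 and 1")
    return it_load_kw * (1.0 - liquid_capture_fraction)


# Hypothetical rack loads; assumed 60% liquid capture leaves 40% for air.
results = {}
for rack_kw in (10.0, 20.0, 40.0):
    air_kw = residual_air_load_kw(rack_kw, 0.60)
    results[rack_kw] = air_kw
    print(f"{rack_kw:.0f} kW rack -> {air_kw:.1f} kW still to be removed by air")
```

Doubling the rack load doubles the air-side burden, so the fans, vents and filtration that cold plates were meant to relieve remain a growing part of the design.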

To overcome these obstacles and enable the edge to deliver its full promise, we need to move on from the legacy mindset that says whatever works now will continue to work in the future. We need to implement new solutions that are edge-smart and fit for purpose: technologies that can be deployed resiliently, securely and responsibly, and that deliver the real value the edge offers rather than the nightmare of a mismanaged implementation.

The answer is chassis-level liquid cooling

Chassis-level liquid cooling combines the advantages of both cold plate and immersion liquid cooling while removing the drawbacks of each. It uniquely allows your compute to be deployed to the edge in a sealed and safe environment that makes it impervious to heat, dust and humidity, while endowing it with a high degree of “human error resilience”, or “idiot proofing”, as more seasoned veterans might prefer to call it!

A sealed and silent chassis allows edge IT to be delivered in latency-busting proximity to the point of need with class-leading energy performance from day one. By reducing single points of failure and increasing resilience, chassis-level liquid cooling technologies significantly reduce the cost and risk of servicing and maintenance at the edge.

It is beyond doubt that new and emerging applications will play a major role in advancing our home and business lives. At the heart of this is not just the way that next-generation microprocessors and software are designed, but also the way that physical infrastructure such as chassis-level liquid cooling is utilised to ensure the scalability, reliability and efficiency of IT services for always-on users.

We can all enjoy the competitive advantages and real-time value that AI and other amazing technologies can deliver at the edge, but we need to do it in a way that keeps our planet safe and our data secure. The future is beckoning; don’t screw it up at the edge.