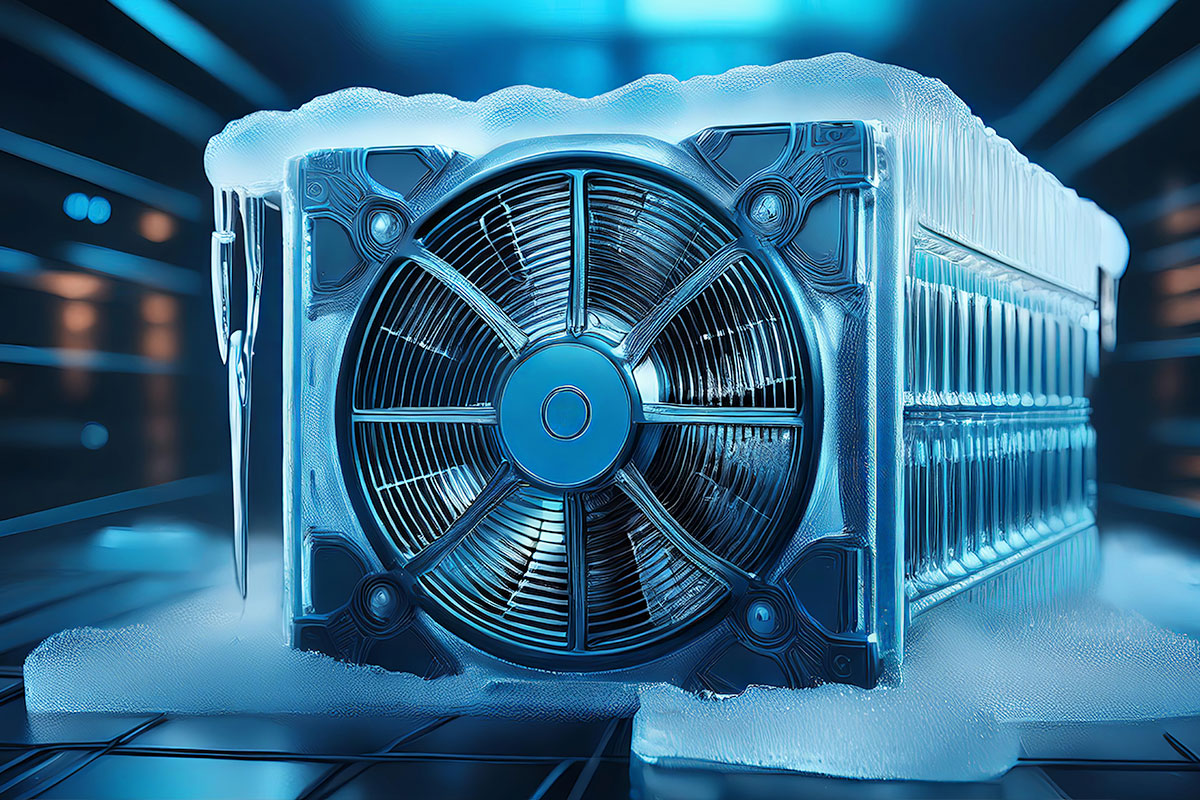

With traditional cooling methods struggling to keep up with the rapid growth of AI workloads, Peter Huang, Global Vice President – Data Centre, Thermal Management – at Castrol, asserts that data centres could be on the precipice of a cooling revolution.

Data centres stand at a critical crossroads. The immense growth of AI workloads is pushing traditional cooling infrastructure to its tipping point, and data centres risk failures if cooling systems are pushed beyond their limits.

As the number of data centres in the UK and their associated workloads are set to increase, data centre leaders must act now – there must be a cooling revolution to ensure a future where we can keep up with the demands of next-generation technologies. Without a shift to more effective cooling infrastructures, the transformative potential of AI will remain constrained by current air cooling techniques.

AI’s increasing scale

AI is expanding at an astounding pace, with Goldman Sachs Research forecasting that global power demand from data centres will increase by as much as 165% by 2030. This surge in demand and computing power will consume massive amounts of energy, generating unprecedented levels of heat that conventional cooling technologies will not be able to handle efficiently.

Cooling can currently account for approximately 40% of a data centre’s total energy consumption, and as AI continues to scale up, even more energy will need to be dedicated to cooling if we continue to rely on traditional air cooling methods. This shift in computing requirements demands an equally transformative shift in thermal management.
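
To make the 40% figure concrete, the arithmetic can be sketched as below. This is an illustration only: the function names, the 100,000 MWh facility and the 25% alternative cooling share are hypothetical values chosen for the example, not figures from the research cited in this article.

```python
def cooling_energy_mwh(total_mwh: float, cooling_share: float = 0.40) -> float:
    """Energy spent on cooling, given total facility energy and the cooling share."""
    if not 0.0 <= cooling_share < 1.0:
        raise ValueError("cooling_share must be in the range [0, 1)")
    return total_mwh * cooling_share


def total_with_new_cooling_share(total_mwh: float, old_share: float, new_share: float) -> float:
    """Total facility energy if the non-cooling load stays fixed
    while cooling's share of the total changes from old_share to new_share."""
    if not (0.0 <= old_share < 1.0 and 0.0 <= new_share < 1.0):
        raise ValueError("shares must be in the range [0, 1)")
    non_cooling_mwh = total_mwh * (1.0 - old_share)
    return non_cooling_mwh / (1.0 - new_share)


if __name__ == "__main__":
    total = 100_000.0  # hypothetical annual facility consumption in MWh
    print(f"Cooling energy at 40% share: {cooling_energy_mwh(total):,.0f} MWh")
    new_total = total_with_new_cooling_share(total, old_share=0.40, new_share=0.25)
    print(f"Total if cooling share fell to 25%: {new_total:,.0f} MWh")
```

Because the non-cooling load is held constant, lowering cooling's share reduces the facility's total consumption as well as the cooling bill itself.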

As well as making data centres more efficient, the AI revolution will demand that computer chips – which are becoming increasingly powerful – are kept at their optimum temperature. While traditional air-cooling systems remain effective for lower power density chips and racks, they struggle with rack densities above 50 kW. With future requirements approaching 1,000 kW per rack, enhanced cooling infrastructure will be essential for data centres to keep pace.

Workloads and power densities will only grow as AI adoption continues at pace. With current infrastructure already struggling, data centre managers risk massive failures if they do not start to adopt new cooling methods now. To overcome this challenge and ensure AI development continues its rapid advancement, liquid cooling may offer the most promising solution.

The shift from traditional cooling methods

While traditional air cooling systems falter under extreme heat loads, liquid cooling technologies offer dramatically improved efficiency.

There are two main liquid cooling technologies in data centres that target heat at its source – immersion cooling and direct-to-chip cooling. Both enhance efficiency and enable data centres to manage significantly higher compute densities.

The immersion approach fully submerges servers in non-conductive fluid, while direct-to-chip systems use specialised heat sinks or cold plates to deliver liquid coolant – either water or specially designed fluids – directly to the components generating the most heat.

Companies like Nvidia are advancing the use of direct-to-chip cooling, developing specialised water-cooled rack specifications for their high-performance chips. While this signals an industry shift toward liquid cooling as an effective method to handle extreme thermal loads, the use of water in some liquid cooling infrastructures is on track to contribute to water shortages globally.

The level of water consumption needed to adequately cool AI chips is worryingly high. Current projections suggest that global AI operations could consume up to 6.6 billion cubic metres of water by 2027 – equivalent to nearly two-thirds of England’s total annual water consumption.

In regions like Sussex, Cambridgeshire, Suffolk, and Norfolk, water scarcity is already a major issue. While many are hoping that the use of water in cooling infrastructure can sustain the future of data centres and AI technology, the use of water itself may not be sustainable.

Could the answer to water scarcity and efficient thermal management be immersion cooling?

Immersion cooling could emerge as a superior alternative to water-based liquid cooling, addressing the resource constraints that threaten AI’s future. Immersion cooling utilises specialised dielectric fluids, drastically reducing water consumption while delivering superior thermal performance.

In a recent study, 74% of data centre leaders surveyed said they believe immersion cooling is now the only option for data centres to meet current computing power demands, and 90% said they are considering switching to this method between now and 2030.

As the research demonstrates, organisations implementing immersion cooling could save at least 15,000 MWh of energy annually compared to conventional methods. Data centres could also save 3.5 million litres of water every year by switching to immersion cooling – a dual benefit of environmental responsibility and operational efficiency that water-based systems simply cannot match.

However, this cooling revolution cannot be achieved in isolation. The transformation to liquid cooling demands collaboration and partnerships between technology providers, data centre operators, and hardware manufacturers to ensure that immersion cooling technology is tested effectively before deployment. It also demands investment in R&D to keep up with new AI technology requirements.

The path forward is clear: liquid cooling represents not just an alternative cooling method but an essential evolution to future-proof AI technologies and improve data centre efficiencies for years to come.