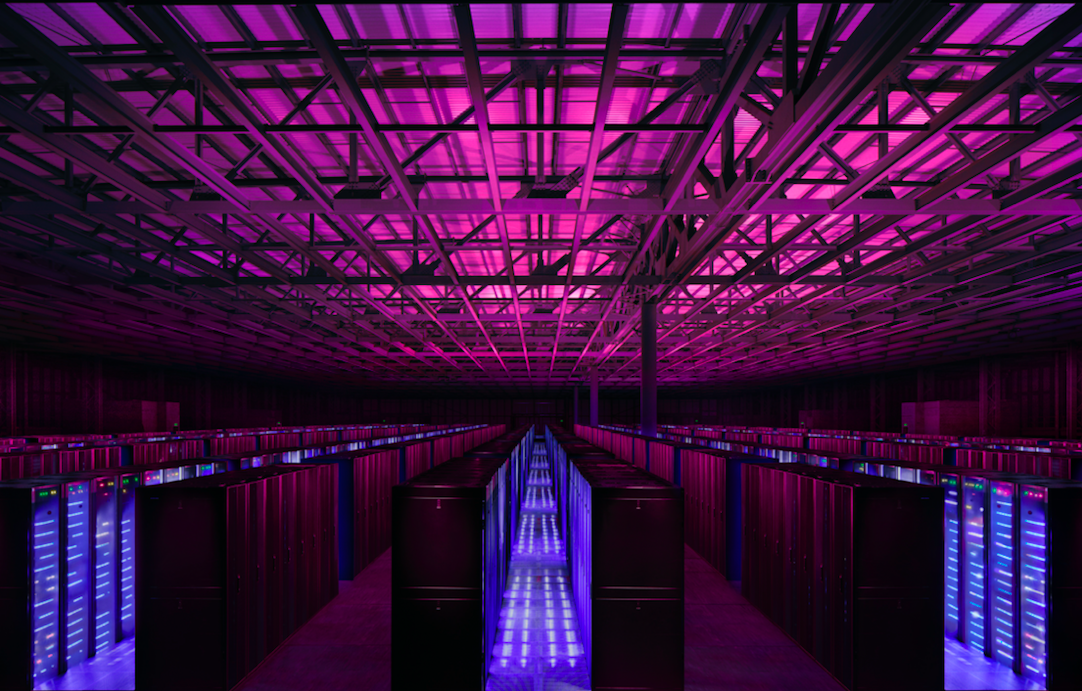

High Performance Computing puts pressure on colocation credentials. Simon Bearne, commercial director at Next Generation Data, explores some key colocation considerations for meeting HPC expectations.

High Performance Computing (HPC) is now firmly established, as shown by the increasing demand for compute power from existing users and the growing number of organisations identifying use cases. However, the thirst for compute power and relatively short refresh cycles are proving difficult for self-build on-premise installations. The difficulties lie in financial returns and logistics (parallel builds), and in upgrading the plant and utilities (power) supplied to site.

Yet would-be HPC customers are already finding it challenging to identify colocation providers capable of providing suitable environments on their behalf, especially when it comes to powering and cooling these highly dense and complex platforms. Suitable colocation providers in the UK, and in many parts of Europe, are few and far between.

For many HPC applications, whether in academia, commercial research, advanced modelling, engineering, pharma, extractives, government or artificial intelligence, self-build has been the preference. But there is a gradual realisation that this is not core business for most. Growing dependence on platform availability is pushing these installations towards critical-services environments, making it operationally intensive for in-house teams to maintain and upgrade them; the facilities required often cost as much as the computers themselves.

There are many colos out there, and many have enough power in aggregate. However, it is power rather than space that becomes the constraint: dense HPC deployments can use up a facility's entire available power supply while the facility itself remains half empty.

Furthermore, the majority of colocation providers have little experience of HPC, and their business models do not support the custom builds required. The cooling involved also demands bespoke build and engineering skills, and many colos are standardised/productised and therefore unused to deploying the specialist technologies required.

Cloud constraints

Faced with such constraints, the public or private cloud is a possible option, but it currently remains unsuitable for many HPC workloads, despite cloud computing's promise of elasticity: additional compute resource at will for specific workloads. Cloud may be fine for standard workloads, but for HPC use cases there are issues with data protection, control, privacy and security, not to mention limitations in compute performance, I/O and communications.

HPC is considerably more complex: it needs a mix of CPU and GPU server capabilities, highly engineered interconnects between all the various systems and resources, and storage latencies held in the low milliseconds, microseconds or even nanoseconds.

All this requires highly specialised workload orchestration that is not available on general public cloud platforms. Attempting to create a true HPC environment on top of a general public cloud is very challenging. Aside from the technical issues, trust would also appear to remain a significant barrier. If the decision is taken to go the colocation route, there are a number of key criteria to consider.

Power

Highly concentrated power to the rack, in ever smaller footprints, is critical because dense HPC equipment needs high power densities, far more than the average colocation facility in Europe typically offers. The average colocation power per rack is circa 5 kW and rarely exceeds 20 kW, whereas HPC platforms typically draw around 30 kW and upwards; NGD is seeing densities rise to 40 and 50 kW, with some installations exceeding 100 kW per rack.
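To make the power-versus-space skew concrete, the short Python sketch below counts how many racks a fixed power budget can feed at different densities and how many rack positions are left stranded as a result. The hall size (400 positions) and IT power budget (2,000 kW, i.e. provisioned for roughly 5 kW per rack) are hypothetical figures chosen for illustration, not NGD data; the densities are the ones quoted above.

```python
import math

def racks_supported(it_power_kw: float, kw_per_rack: float, positions: int) -> int:
    """Racks that can actually be powered: limited by power or floor space, whichever runs out first."""
    return min(positions, math.floor(it_power_kw / kw_per_rack))

def stranded_positions(it_power_kw: float, kw_per_rack: float, positions: int) -> int:
    """Rack positions left empty because the power budget is exhausted first."""
    return positions - racks_supported(it_power_kw, kw_per_rack, positions)

# Hypothetical hall: 400 rack positions and 2,000 kW of IT power (about 5 kW per position).
POSITIONS = 400
IT_POWER_KW = 2000

for density_kw in (5, 20, 30, 40, 50, 100):
    used = racks_supported(IT_POWER_KW, density_kw, POSITIONS)
    empty = stranded_positions(IT_POWER_KW, density_kw, POSITIONS)
    print(f"{density_kw:>3} kW/rack: {used:>3} racks powered, {empty:>3} positions stranded")
```

Under these assumptions, at 40 kW per rack only 50 of the 400 positions can be powered, so seven-eighths of the hall sits empty even though its power supply is fully committed.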

Achieving much higher power-to-space ratios will therefore be a major game changer in the immediate future, segmenting facilities that can from those that can't. The ultimate limitation for most on-premise or commercial data centres will be the availability of sufficient power to the location from electricity utility companies.

So it is essential to check if the colocation facility can provide that extra power now – not just promise it for the future – and whether it charges a premium price for routing more power to your system. Furthermore, do the multi-cabled power aggregation systems required include sufficient power redundancy?
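The sketch below illustrates why dense racks need multi-cabled power and what redundancy implies for the cabling. It assumes a 2N (A+B) arrangement in which either side alone must carry the full rack load, 400 V three-phase 32 A circuits, and a 0.8 derating factor so that circuits are not run at their full rating; all three are illustrative assumptions rather than any provider's specification.

```python
import math

def three_phase_kw(line_voltage_v: float, current_a: float, power_factor: float = 1.0) -> float:
    """Real power of a balanced three-phase circuit: sqrt(3) * V_line * I * PF, in kW."""
    return math.sqrt(3) * line_voltage_v * current_a * power_factor / 1000

def feeds_for_rack(rack_kw: float, circuit_kw: float, derating: float = 0.8) -> tuple[int, int]:
    """Circuits needed per side, and in total, for a hypothetical 2N (A+B) supply.

    Each side must be able to carry the whole rack on its own if the other side fails,
    and each circuit is loaded to at most `derating` of its rating (an assumed margin).
    """
    per_side = math.ceil(rack_kw / (circuit_kw * derating))
    return per_side, 2 * per_side

circuit = three_phase_kw(400, 32)  # assumed 400 V, 32 A three-phase circuit
for rack_kw in (5, 30, 40, 50, 100):
    per_side, total = feeds_for_rack(rack_kw, circuit)
    print(f"{rack_kw:>3} kW rack: {per_side} circuits per side, {total} in total")
```

Under these assumptions a 5 kW rack needs one circuit per side, while a 40 kW rack needs three per side (six in total), which is where the question of whether the aggregation system carries genuine redundancy becomes pressing.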

Critical services

There will always be some form of immediate failover power supply in place, typically an uninterruptible power supply (UPS), which carries the load until auxiliary power from diesel generators takes over. However, such immediate power provision is expensive, particularly when there is a continuous high draw, as is required by HPC.

UPS and auxiliary power systems must be capable of supporting all workloads running in the facility at the same time, along with overhead and enough redundancy to deal with any failure within the emergency power supply system itself. This is not necessarily accommodated in colocation facilities looking to move up from general purpose applications and services to supporting true HPC environments.
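As a rough illustration of that sizing rule, the sketch below adds up every load in the facility, applies an overhead margin, rounds up to whole UPS modules and then adds spare modules for redundancy (N+1 by default). The load list, the 10% overhead and the 250 kW module size are hypothetical values for illustration only, and the sketch deliberately ignores battery autonomy and generator start-up time.

```python
import math

def ups_modules(loads_kw: list[float], overhead_fraction: float, module_kw: float,
                spare_modules: int = 1) -> int:
    """UPS modules to install: all loads running at once, plus overhead, plus spares.

    spare_modules=1 gives an N+1 configuration; spare_modules=0 gives no redundancy.
    """
    required_kw = sum(loads_kw) * (1 + overhead_fraction)
    n = math.ceil(required_kw / module_kw)  # modules needed just to carry the load
    return n + spare_modules

# Hypothetical facility: three HPC suites drawing 400, 350 and 250 kW continuously.
loads = [400, 350, 250]
print(ups_modules(loads, overhead_fraction=0.10, module_kw=250))                   # -> 6 (N+1)
print(ups_modules(loads, overhead_fraction=0.10, module_kw=250, spare_modules=0))  # -> 5 (N only)
```

The difference between the two results is the spare capacity that lets the emergency supply survive a failure within itself, which a facility sized only for general-purpose loads may not have.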

Cooling

HPC requires highly targeted cooling, and simple computer room air conditioning (CRAC) or free-air cooling systems (such as swamp or adiabatic coolers) typically lack the capabilities required. Furthermore, hot- and cold-aisle cooling systems are increasingly inadequate for the heat generated by larger HPC environments, which will require specialised and often custom-built cooling systems and procedures.

This places increased emphasis on ensuring there are on-site engineering personnel with knowledge of designing and building bespoke cooling systems, such as direct liquid cooling, which removes heat highly efficiently and avoids on-board hot spots. Such systems reduce high-temperature problems without excessive air circulation, which is both expensive and noisy.
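The advantage of direct liquid cooling follows from the basic heat-transport relation Q = m_dot × c_p × ΔT. The sketch below rearranges it for volumetric flow and compares water with air for a single rack. The 50 kW rack and the 10 K coolant temperature rise are hypothetical; the fluid properties are approximate room-temperature values (water: c_p ≈ 4186 J/(kg·K), ρ ≈ 1000 kg/m³; air: c_p ≈ 1005 J/(kg·K), ρ ≈ 1.2 kg/m³).

```python
# Approximate fluid properties near room temperature (illustrative values).
WATER = {"cp_j_per_kg_k": 4186.0, "density_kg_per_m3": 1000.0}
AIR = {"cp_j_per_kg_k": 1005.0, "density_kg_per_m3": 1.2}

def volumetric_flow_m3_s(heat_kw: float, delta_t_k: float, cp_j_per_kg_k: float,
                         density_kg_per_m3: float) -> float:
    """Flow needed to carry away heat_kw with a coolant temperature rise of delta_t_k.

    From Q = m_dot * cp * dT with m_dot = rho * V_dot, so V_dot = Q / (rho * cp * dT).
    """
    return heat_kw * 1000.0 / (density_kg_per_m3 * cp_j_per_kg_k * delta_t_k)

RACK_KW = 50.0    # hypothetical rack heat load
DELTA_T_K = 10.0  # hypothetical coolant temperature rise

water = volumetric_flow_m3_s(RACK_KW, DELTA_T_K, **WATER)
air = volumetric_flow_m3_s(RACK_KW, DELTA_T_K, **AIR)
print(f"water: {water * 60_000:.1f} L/min")  # convert m3/s to L/min
print(f"air:   {air:.2f} m3/s")
print(f"air needs roughly {air / water:,.0f}x the volume flow of water")
```

With these assumed values, about 72 L/min of water does the same job as roughly 4 m³/s of air, which is why moving heat with air alone at these densities becomes expensive and noisy.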

Fibre connectivity/latency

Consider the availability of diverse high-speed on-site fibre cross connects. Basic public connectivity solutions will generally not be sufficient for HPC systems, so look for providers that have specialised connectivity solutions, including direct access to fibre.

While the HPC platform may be working well, what if the link between the organisation or the public internet and the colocation facility goes down and there is no capability for failover? As many problems with connectivity come down to physical damage, such as cables being broken during roadworks, ensuring that connectivity is through multiple diverse connections from the facility is crucial.

Other areas where a colocation provider should be able to demonstrate capabilities include specialised connections to public clouds, such as Microsoft Azure ExpressRoute, AWS Direct Connect and specialised networks such as Jisc/Janet. These bypass the public internet to enable more consistent and secure interactions between the HPC platform and other workloads the organisation may be operating.

Location

The physical location of the data centre will impact directly on rack space costs and power availability. In the case of colocation, there are often considerable differences in rack space rents between regional facilities and those based in or around large metro areas such as London.

Perhaps of more concern to HPC users, most data centres in and around London are severely power-limited. The Grid in the South East is creaking, and the potential cost of upgrades to bring more power to these locations is astronomical. Out-of-town facilities do not face the same challenges. Fortunately, the ever-decreasing cost of high-speed fibre gives more freedom to build modern colo facilities much further away from metro areas without incurring the latency issues of old.
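The latency point can be checked with simple propagation arithmetic. Light in optical fibre travels at roughly two-thirds of its speed in a vacuum, about 200 km per millisecond, so each kilometre of fibre adds around 5 µs one way. The sketch below estimates round-trip propagation delay for a few hypothetical route lengths; it ignores switching, serialisation and queuing delay, and real fibre routes are longer than the straight-line distance between sites.

```python
FIBRE_KM_PER_MS = 200.0  # approx. speed of light in glass fibre (refractive index ~1.5)

def fibre_rtt_ms(route_km: float, km_per_ms: float = FIBRE_KM_PER_MS) -> float:
    """Round-trip propagation delay in milliseconds over a fibre route of route_km."""
    return 2.0 * route_km / km_per_ms

for route_km in (10, 50, 100, 200):  # hypothetical route lengths
    print(f"{route_km:>4} km route: ~{fibre_rtt_ms(route_km):.2f} ms round trip")
```

Under these assumptions even a 100 km route adds only about 1 ms of round-trip propagation delay.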

In summary, most HPC users have so far taken the on-premise route, but will now struggle with increasing costs and the challenges of obtaining more power to site, operating complex cooling, and ensuring the duplicated plant environments that critical services require.

Those considering colocation solutions as an alternative must thoroughly evaluate the ability of their potential data centre providers to offer facilities that are truly fit for purpose. NGD, for example, has a permanent presence of on-site consulting engineers and is geared to tackle custom HPC builds.