Margarita Patria, Principal, Chris Nagle, Principal, and Oliver Stover, Senior Associate, at Charles River Associates explore the energy challenge behind the data centre boom.

Skyrocketing interest in AI technologies is driving an unprecedented need for power and reshaping the energy landscape. Each user request to generate an AI-driven image or automate a task requires 10 times more power than what’s needed for a simple Google search. Tech giants like AWS, Google, Microsoft, and Meta are investing $50 billion per quarter in AI computing and data centres, pushing the boundaries of global delivery infrastructure.

This race brings significant challenges for electric utilities. A single hyperscale data centre can double some utilities’ demand forecasts almost overnight, causing seismic shifts in power needs. Utilities are understandably concerned. As a result, data centre developers are venturing beyond traditional hubs like Northern Virginia, searching for available generation and transmission capacity. Utilities across the country must now meet new data centre loads without compromising existing customers’ interests. Rising to the challenge will require creativity, flexibility, and transparency.

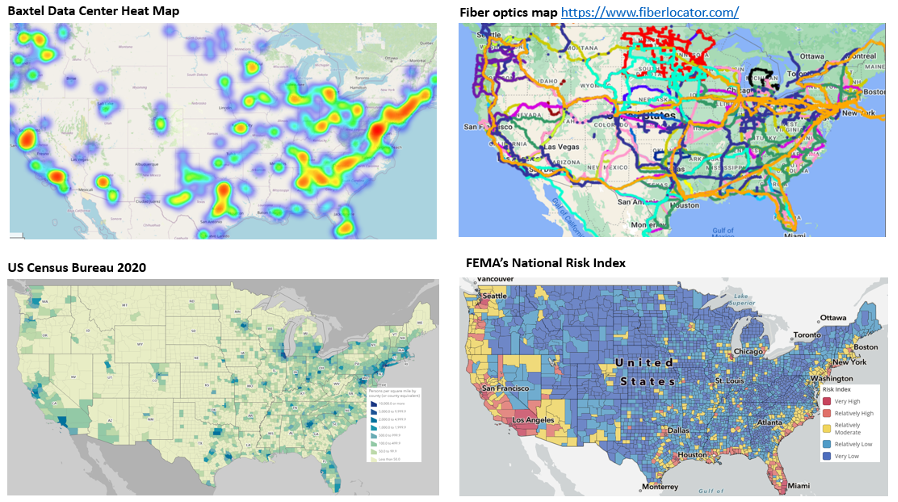

Figure 1 (above) presents a heat map of data centre locations across the US, highlighting key factors for site selection, including proximity to population centres, access to fibre-optic networks, and, where feasible, avoidance of disaster-prone areas. These factors, along with the availability of reliable, affordable, and sustainable generation resources, are increasingly important as developers expand into new regions.

As the heat map illustrates, data centre load is spreading across the US, and utilities must be prepared to meet this growing demand. Utility planners need to rethink their approach to load forecasting, as traditional methods may no longer suffice in predicting these large, discrete data centre loads.

Unlike those of traditional customers, modern data centre loads come in sizeable blocks, measured in gigawatts (GW). To put this into perspective, 1 GW can power approximately 750,000 to 1 million homes. Planners in markets with significant data centre growth can attest to sharp year-over-year spikes in forecasts. Yet many utilities still rely on average projections, risking significant over- or under-forecasting. If a large project fails to materialise, these utilities are left grappling with overestimated demand; if it proceeds at full scale, the averaged forecast falls short.
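To make the forecasting risk concrete, the sketch below compares a probability-weighted average projection with the two outcomes that can actually occur for a single large project. The baseline load, project size, and completion probability are illustrative assumptions, not figures from any utility, and the function name is hypothetical.

```python
def discrete_load_scenarios(baseline_mw, project_mw, probability):
    """Compare an averaged forecast with the two possible outcomes for one project.

    All inputs are hypothetical. `probability` is the assumed chance that
    the data centre project is actually built.
    """
    expected = baseline_mw + probability * project_mw  # the "average" projection
    if_built = baseline_mw + project_mw                # project proceeds in full
    if_cancelled = baseline_mw                         # project never materialises
    return {
        "expected_mw": expected,
        "if_built_mw": if_built,
        "if_cancelled_mw": if_cancelled,
        "shortfall_if_built_mw": if_built - expected,
        "surplus_if_cancelled_mw": expected - if_cancelled,
    }


# Assumed example: a 2,000 MW utility weighing a 1,000 MW project at 50% odds.
result = discrete_load_scenarios(baseline_mw=2000.0, project_mw=1000.0, probability=0.5)
for name, value in result.items():
    print(f"{name}: {value:.0f}")
```

Under these assumptions the averaged forecast matches neither outcome: it is 500 MW too low if the project is built and 500 MW too high if it is cancelled.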

Looking to the future

Forecasting faces two competing challenges: regulators’ concerns over inflated growth and data centre developers’ real plans. On one hand, the race to bring data centres online has given rise to the risk of ‘double counting’ potential growth. Developers often explore multiple utility partnerships within a single integrated market, and if each utility includes the potential development in its forecast, market-wide growth projections can become wildly inflated. This has led to scepticism about the stated forecasts.
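The double-counting effect can be illustrated with a short sketch. It assumes each forecast entry carries a shared project identifier, which is a simplification: in practice, recognising that several utilities are describing the same development is the difficult part. The utility names, project IDs, and megawatt values are made up for illustration.

```python
from collections import defaultdict


def market_wide_growth(forecast_entries):
    """Return (naive_total_mw, deduplicated_total_mw).

    Each entry is (utility, project_id, mw). `project_id` is a hypothetical
    shared identifier used here to spot the same project in several forecasts.
    """
    # Naive view: add up every utility's forecast as if all were distinct.
    naive_total = sum(mw for _, _, mw in forecast_entries)

    # Deduplicated view: count each project once, at its largest stated size.
    largest_by_project = defaultdict(float)
    for _, project_id, mw in forecast_entries:
        largest_by_project[project_id] = max(largest_by_project[project_id], mw)

    return naive_total, sum(largest_by_project.values())


# Assumed example: one 800 MW project shopped to three utilities, plus one other.
entries = [
    ("Utility A", "dc-1", 800.0),
    ("Utility B", "dc-1", 800.0),
    ("Utility C", "dc-1", 800.0),
    ("Utility A", "dc-2", 300.0),
]
naive, deduped = market_wide_growth(entries)
print(f"naive: {naive:.0f} MW, deduplicated: {deduped:.0f} MW")
```

In this made-up case, summing individual forecasts gives 2,700 MW of growth, while only 1,100 MW of distinct projects exists.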

On the other hand, forecasts have consistently trended up, and annual updates are proving inadequate. For instance, PJM has adjusted its annual forecasts to account for data centre load growth and incorporated discrete projects into its Transmission Expansion Advisory Committee’s planning. However, rapid development has pushed many forecasts out of date soon after they are published. Frequent, transparent updates will be essential moving forward to send clearer market signals and to build trust among stakeholders.

Beyond the challenge of accurate forecasting, utility planners and regulators are concerned about the long-term viability of data centre operations. If data centre loads ebb or disappear altogether due to shifting market conditions, huge capacity investments may become stranded, resulting in higher rates for other customers. This fear has led to heightened caution, with both utilities and regulators wary of investing in infrastructure without guarantees that operators will foot the bill long into the future. But hesitation can become self-defeating. Data centres need quick paths to market and have significant flexibility to locate where those paths readily exist. Utilities that move too slowly may miss out.

In response to these risks, utilities have begun exploring alternative service arrangements. For example, AEP Ohio has proposed a ‘take-or-pay’ tariff that contractually binds large loads to payments well into the future. Such approaches reduce the risk of stranded costs but also reduce business flexibility for operators. Future agreements will need to balance the needs of each party carefully, because the ability to accelerate decision-making and improve outcomes for all stakeholders depends on it.
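As a rough illustration of how a take-or-pay arrangement shifts risk, the sketch below bills a customer on the larger of its actual peak demand and a minimum share of its contracted capacity. This is a generic minimum-demand rule, not the terms of AEP Ohio’s proposal; the function name, the 75% floor, and the rate are all hypothetical.

```python
def monthly_demand_charge(contract_mw, actual_peak_mw, rate_per_mw, min_fraction):
    """Demand charge under a simple, hypothetical take-or-pay rule.

    The customer pays for the larger of its actual peak and a minimum
    share (`min_fraction`) of the capacity it contracted for.
    """
    billed_mw = max(actual_peak_mw, min_fraction * contract_mw)
    return billed_mw * rate_per_mw


# Assumed example: 400 MW contract, 75% floor, 10,000 per MW-month.
low_usage = monthly_demand_charge(400.0, 100.0, 10_000.0, 0.75)
high_usage = monthly_demand_charge(400.0, 350.0, 10_000.0, 0.75)
print(f"low usage bill:  {low_usage:,.0f}")
print(f"high usage bill: {high_usage:,.0f}")
```

With these assumed numbers, a customer drawing only 100 MW is still billed for 300 MW, which protects other ratepayers from stranded costs but limits the operator’s ability to scale back cheaply.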

Once a utility and data centre customer reach an agreement, the focus shifts to reliably meeting the massive power demands. In the past, utilities with excess generation and transmission capacity could onboard data centres relatively quickly. However, with today’s hyperscale data centres requiring gigawatts of power, few utilities have sufficient excess capacity to serve them without significant infrastructure investment. Utilities must now expand their capital project teams to manage these large-scale projects amid the energy sector’s ongoing supply chain constraints, which are compounded because many utilities across the country face similar demands.

Utilities also contend with the timing mismatch between when a data centre wants to go live and when the necessary infrastructure can be built. Some delay data centre onboarding until sufficient resources can be developed, while others rely on short-term capacity market purchases (frequently termed a ‘capacity bridge’) as a temporary solution while building their own native energy resources.

However, if too many utilities pursue the capacity bridge strategy at once, it could drive up energy and capacity prices, straining the market’s ability to provide reliable power. Already, several utilities and markets, including PJM, have expressed concerns about the grid’s ability to onboard the level of growth that data centres require. Improved coordination between utilities, fast-tracking of energy-dense resources, and even restarting nuclear assets are some of the approaches being explored to ensure resource adequacy for both data centre and non-data centre customers.

A focus on decarbonisation

Finally, it’s important to acknowledge the role of decarbonisation in this new energy paradigm. Many data centre developers have ambitious decarbonisation goals, and utilities themselves are often working toward reducing their carbon footprints. But decarbonisation efforts sometimes conflict with the need to provide around-the-clock, reliable power. While natural gas remains a go-to resource for meeting capacity needs, utilities are increasingly looking to a future dominated by renewable energy, supported by expanded transmission systems capable of sharing these resources across wider markets. In this scenario, gas resources would be used only sparingly to keep the grid reliable, with clean energy supplying most of the power.

Some data centre developers are already taking bold steps in this direction, capitalising on green tariff programmes, like APS’ Green Partners Programme, to reduce the cost of additional renewable resources and minimise the use of natural gas. Other examples of creative approaches to providing reliable, sustainable energy are Microsoft’s recent partnership with Constellation Energy to help restart the Three Mile Island nuclear unit and Google’s power purchase agreement with NV Energy to tap into geothermal power. These partnerships represent a win-win-win: data centres can achieve their decarbonisation goals, utilities can pilot cutting-edge technologies, and consumers benefit from cleaner energy with limited exposure to the risks of new technologies.

In this new era of rapid load growth, data centre developers, utility planners, and regulators must collaborate closely to ensure that both data centres and the broader energy market receive affordable, reliable, and clean power. By improving forecasting transparency, managing infrastructure projects proactively, coordinating market strategies, and embracing emerging clean technologies, the energy sector can rise to the challenge of supporting an AI-driven and decarbonised future.